| |

Understanding the arm navigation Planning Scene Architecture

Description: This tutorial will go into the new formulation of arm navigation 1.0, focusing particularly on the Planning Scene. It will describe what it is, the design philosophy behind it, and how to use it in different contexts.Keywords: planning scene electric arm_navigation

Tutorial Level: ADVANCED

Contents

Overview

The architecture of the arm_navigation stack for electric is designed with the idea that there are situations where a user may want to do planning outside the context of the current physical state of a robot. Previous incarnations of arm_navigation were largely intended to address the scenario of a user desiring to move from the current configuration of a robot to a different configuration in a collision-free manner. For arm_navigation 1.0 we have adopted a somewhat different methodology that not only supports this previous use case but also a number of new use cases:

- A user wants to construct a plan from a state that is not the current state of the robot (e.g. I want to make sure that once the robot picks up this mug it can safely move it to a target location).

A user wants to invoke the different components of the system outside of the context of running simulation or a real robot (for tools such as the Planning Components Visualizer

- A user wants to store interesting instances where arm navigation was invoked for offline visualize, debugging, or metrics analysis.

Additionally, we wanted to make the system more efficient by allow users to set up a complete world state and then make repeated calls to components given that world state - many requests to IK testing different grasps, for instance, or high-level planners invoking motion planning repeatedly to evaluate different scenarios.

We implemented these use cases, and this idea of a complete world state using the PlanningScene message. This message represents a complete world state for the purposes of arm_navigation components like IK, planners, and trajectory filters. Passing a component a PlanningScene allows it to set its internal world state to reflect the full state of the world; the components were all re-written using C++ classes from the planning_environment stack to generate world state from PlanningScene rather than from sources associated with a running system, like TF, JointState messages, or the current CollisionMap. The PlanningScene can be set from the current world state, reproducing the former functionality of arm_navigation, but can also be set to correspond to a future state, created on the fly, or loaded from a database or other storage mechanism. By divorcing the arm_navigation components from the current world state we can support all of the new use cases.

In the rest of this tutorial we will discuss the system design in more detail.

System Layout Without a Simulated or Physical Robot

One of the new modes that is enabled by the new system architecture is running arm_navigation without simulation or a real robot - this allows users to interact with planning scene independently from depending on systems of controllers, mechanism interfaces publishing joint states, TF, or odometry that are provided by simulated or physical robots. Running the system in this mode allows for testing and visualization of the planning components, though does not support substantially interacting with perception or the controller/execution framework of a working system.

This diagram shows the system layout when running without a simulated or real robot. The environment_server node is the center of the system, but is run with both the use_monitor and use_collision_map parameters set to false. These signal that the environment_server should not expect to receive messages from a running system in terms of mechanism state or perception.

When the arm_navigation components, like IK, planners, or trajectory filters, are launched they connect to the environment server using an Empty.srv registration method - this all happens automatically by having these nodes contain a CollisionModelsInterface class (see collision_models_interface.h for more information). The environment_server uses the node data contained in this service method to create a SyncPlanningScene.action client back to the component nodes. This creates a pathway for PlanningScene information to move through the environment_server to the nodes - this is their sole connection to the world state.

The planning_components_visualizer then connects to the environment server with the SetPlanningSceneDiff.action. This action contains a full PlanningScene message in the field planning_scene_diff - in a running system this field can be used to supplement or change the current state when running alongside a simulated or real robot. When the use_monitor parameter of the environment_server is set to false, however, the planning_scene_diff field is assumed to contain the full planning scene specification, as there is no other source of world state information. Initially the planning_components_visualizer seeds the PlanningScene with nothing except the default robot state - the current pose of the robot joints are required to be included; it then calls the SetPlanningSceneDiff action in the environment_server. When the environment_server receives this message it constructs SyncPlanningScene.action goals with the proper fields filled out given the planning_scene_diff and calls the action clients for all registered arm_navigation components. These components can then set their internal state given the new planning scene, and will be ready to respond to service or action calls. These components MUST BE initialized with a PlanningScene or they will not be able to function fully or at all. After all the actions execute and return the planning_components_visualizer updates its own internal state with the new planning scene and can make calls to the arm_navigation components, as all components are guaranteed to contain the same notion of the world state.

When the user takes certain actions in the planning_components_visualizer that affect the world state, such as adding or moving a collision object pole, this information must be passed through the environment server to the arm_navigation components using the SetPlanningSceneDiff.action. Changing the robot's internal state, such as moving an arm, does not require updating the PlanningScene, as this information can be passed in the kinematics or motion plan requests themselves.

Note that the planning_components_visualizer.launch file produced by the Planning Description Configuration Wizard contains some additional nodes that are necessary for visualization robot state in Rviz, such as a robot_state_publisher. This is a product of the visualization requirements, and not an essential component of the arm_navigation system.

System Layout With a Simulated or Physical Robot

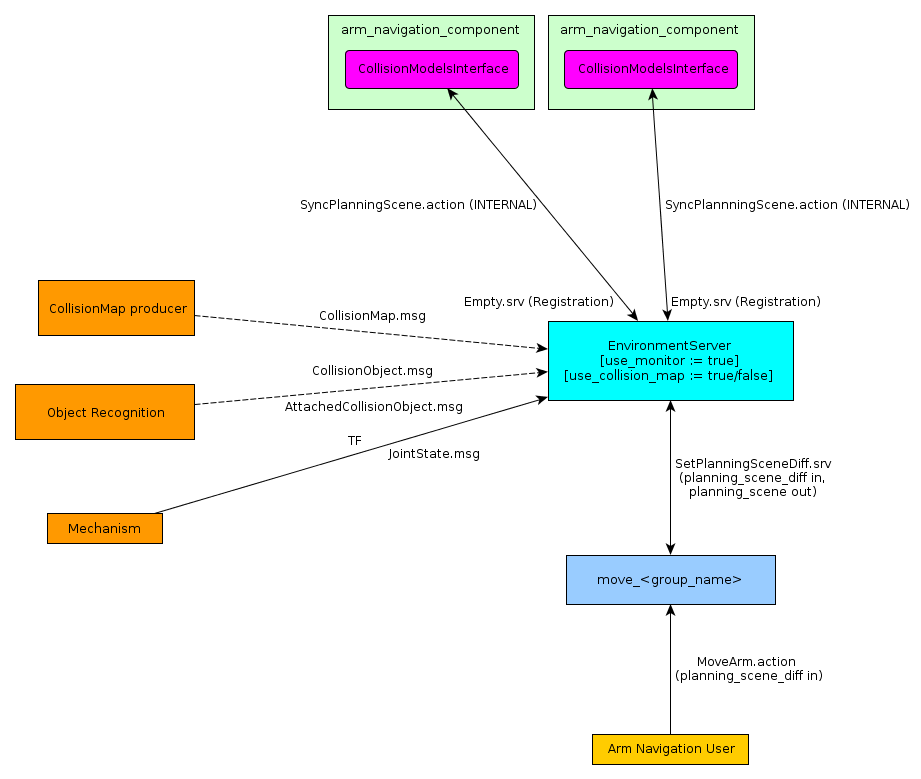

Running arm_navigation with a running system can be represented with this system layout:

The components that are supplied by external systems are in orange on the left - the only essential information source is the mechanism messages for TF and joint_states. If the use_collision_map parameter for the environment_server is set to true there must be a supplier of the CollisionMap or the environment_server will not start. Object recognition is optional, but if there are publications the environment_server will incorporate them into the current world state.

The primary difference between the system and the one without a simulated or real robot is that the monitoring capability in the environment_server provides the arm_navigation system with a current world state.

In this mode the primary use comes through the move_<group_name> nodes. These nodes are supplied with a planning_scene_diff through the MoveArm.action. The move_<group_name> node then calls the SetPlanningSceneDiff action in the environment_server. The planning_scene_diff in this context can and frequently will be empty, which indicates to the environment_server that the current world state is the desired PlanningScene. It takes a snapshot of the current state and fills out a PlanningScene, passing this PlanningScene to the components. The components function in exactly the same manner as in the previous case - they operate based on the supplied PlanningScene without using TF or any other outside information.

Even in a running system the planning_scene_diff can be populated, however. For instance, a user may wish to set a virtual wall to constrain the space of available plans. This can be supplied in the planning_scene_diff, and all the arm_navigation components will respond accordingly. A more likely scenario is that another node will be running along-side move_<group_name> - a grasp planner, say. This node can also call SetPlanningScene.diff with a different robot or object position, and once the best sequence of actions has been determined can pass a series of goals to move_<group_name>.

Note that it is assumed that any resource contention for the arm_navigation system is being handled externally - if a number of different nodes are simultaneously attempting to call SetPlanningSceneDiff in the environment_server then there may be unspecified behavior, as the shared world state may not be as the calling nodes intend when making calls to the components.